UDiscover Elephant Calls

Task: Listen to short clips of forest audio while viewing the corresponding spectrograms, and mark any elephant calls they contain.

The elephant calls project is a collaboration between the Institute for Computational Sustainability (ICS) and the Elephant Listening Project (ELP), part of the Cornell Lab of Ornithology's Bioacoustics Research Program.

The Elephant Listening Project (ELP) is a non-profit research venture using vocal activity to monitor the status and activities of African forest elephants. Capturing their sounds allows researchers to study aspects of their lives that are impossible to observe directly due to their dense forest habitat.

Current projects include using vocal behavior to monitor the activity of elephants in Gabon; collaborating with a leading expert on forest elephant behavior, Andrea Turkalo, to investigate the social dynamics of a population in the Central African Republic; and keeping an ear out for poaching in Central African forests.

Rumbles

Elephants produce a variety of vocalizations, but the most common are rumbles. These low-frequency sounds travel farther than high-frequency ones, making them ideal for long-distance communication. While most rumbles are audible to humans, ELP founder Katy Payne discovered that elephants also produce infrasonic vocalizations (below the range of human hearing), and she has since pioneered studies of communication in the world's largest land mammal.

Autonomous Recording Units

Recording these sounds requires equipment that is sensitive to low frequencies, including microphones, preamplifiers and recording devices. ELP uses a specialized unit called an Autonomous Recording Unit (ARU) developed by engineers in the Cornell University Bioacoustics Research Program.

Credit: Elephant Listening Project

Pitch Shifting

Speeding up the playback of a recording raises every frequency in it by the same factor. Raising infrasonic frequencies into the range humans can hear makes it possible to uncover elephant calls that would otherwise be inaudible in the recording.
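A minimal sketch of why this works: speeding up playback leaves the samples untouched and only plays them at a higher rate, so a tone that completes N cycles per second of recording completes N × speed-up cycles per second of playback. The 15 Hz test tone (below the conventional ~20 Hz lower limit of human hearing), the 1000 Hz recording rate and the 10× speed-up are illustrative assumptions, not ELP's actual settings; the frequency is estimated by counting upward zero crossings.

```python
import math

def tone(freq_hz, sample_rate, seconds):
    """Generate a pure sine tone as a list of samples (illustrative stand-in for a rumble)."""
    n = int(sample_rate * seconds)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def estimate_freq(samples, playback_rate):
    """Estimate the perceived frequency: upward zero crossings per second of playback."""
    crossings = sum(1 for a, b in zip(samples, samples[1:]) if a < 0 <= b)
    playback_seconds = len(samples) / playback_rate
    return crossings / playback_seconds

RECORD_RATE = 1000  # assumed recording sample rate (Hz)
SPEEDUP = 10        # assumed playback speed-up factor

rumble = tone(15, RECORD_RATE, 10)  # 10 s of an infrasonic 15 Hz tone

# Same samples, two playback rates: only the rate changes, not the data.
original = estimate_freq(rumble, RECORD_RATE)            # roughly 15 Hz
sped_up = estimate_freq(rumble, RECORD_RATE * SPEEDUP)   # roughly 150 Hz
print(original, sped_up)
```

The trade-off is duration: a 10 s clip played 10× faster lasts only 1 s, so a listener hears the call higher but much briefer.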

Spectrograms

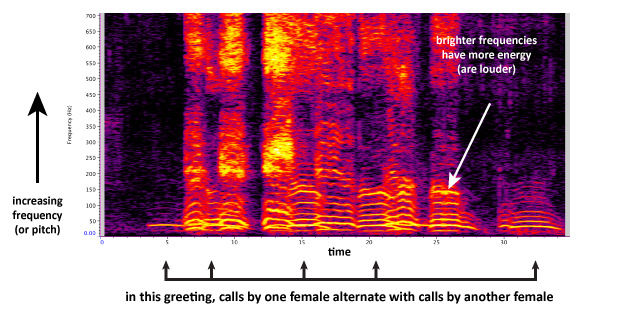

Sounds can also be represented visually as spectrograms. A spectrogram plots time on the x-axis and frequency on the y-axis, and shows how loud each frequency is at each moment by the darkness of the display. An elephant call typically appears as a stack of crescent-shaped lines.
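A spectrogram is commonly computed with a short-time Fourier transform: the audio is cut into short overlapping frames, each frame is windowed, and the magnitude of its frequency content becomes one column of the image. The sketch below assumes a Hann window, a 100-sample frame and a 50-sample hop, all illustrative choices rather than the settings used in this project, and uses a naive DFT for readability where real tools would use an FFT. A display would then map each magnitude (often converted to decibels) to a shade, darker for louder.

```python
import cmath
import math

def hann(n):
    """Symmetric Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]

def spectrogram(samples, frame_len, hop):
    """Return one column per frame; each column holds magnitudes for bins 0..frame_len//2."""
    window = hann(frame_len)
    columns = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        seg = [s * w for s, w in zip(samples[start:start + frame_len], window)]
        mags = []
        for k in range(frame_len // 2 + 1):
            # Naive DFT of bin k; bin k corresponds to k * sample_rate / frame_len Hz.
            acc = sum(x * cmath.exp(-2j * math.pi * k * i / frame_len)
                      for i, x in enumerate(seg))
            mags.append(abs(acc))
        columns.append(mags)
    return columns

FS = 1000  # assumed sample rate (Hz)
signal = [math.sin(2 * math.pi * 100 * i / FS) for i in range(FS)]  # 1 s of a 100 Hz tone
frames = spectrogram(signal, frame_len=100, hop=50)

# The loudest bin in every column should sit at 100 Hz (bin 10 with 10 Hz bins).
peaks = [max(range(len(col)), key=lambda k: col[k]) for col in frames]
print(len(frames), peaks[0] * FS / 100)
```

A steady tone shows up as a single horizontal line; a rumble whose pitch rises and falls, together with its harmonics, would trace the stacked crescents described above.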

ELP's explanations of elephant "language" and of the variety of elephant calls provide examples of vocalizations, along with their spectrograms and accompanying video.

One of our main goals is to develop methods that automatically identify elephant calls in recordings, or even in real time within a recording device. These methods rely on supervised learning, a class of techniques that find structure in data by learning from labeled examples. One such method is deep learning, which represents each example through many layers of nonlinear transformations that extract both local features and higher-level structure. Fitting these models typically requires large amounts of labeled data.
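The supervised-learning pattern (labeled examples in, fitted parameters out, predictions on new data) can be sketched with something far simpler than deep learning. The example below swaps in plain logistic regression trained by gradient descent on a single hypothetical feature, a normalized low-frequency energy score per audio frame; the feature values, labels, learning rate and epoch count are all invented for illustration and are not the project's method.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic(features, labels, lr=0.5, epochs=500):
    """Fit p(call | x) = sigmoid(w * x + b) by batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(features, labels):
            err = sigmoid(w * x + b) - y  # prediction minus label
            grad_w += err * x
            grad_b += err
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def predict(w, b, x):
    """Label a frame 1 (call) if the model's probability is at least 0.5."""
    return 1 if sigmoid(w * x + b) >= 0.5 else 0

# Hypothetical labeled frames: feature = low-frequency energy score, label 1 = call present.
train_x = [0.10, 0.20, 0.15, 0.80, 0.90, 0.85]
train_y = [0, 0, 0, 1, 1, 1]

w, b = train_logistic(train_x, train_y)
print(predict(w, b, 0.05), predict(w, b, 0.95))
```

Deep learning replaces the single hand-picked feature with many learned layers, which is why it needs far more labeled examples than this toy model.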

Human Intelligence Tasks (HITs)

Try listening to short clips of forest elephant recordings and drawing boxes on the spectrogram around the time segments that contain elephant calls.
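One way boxes drawn on a spectrogram could become training labels is to mark every analysis frame that overlaps a box as containing a call. This is a sketch under assumptions: the `(start_s, end_s)` box format, the frame length and hop, and the function name `frame_labels` are hypothetical, since the page does not describe how the task's annotations are stored or used.

```python
def frame_labels(boxes, n_frames, hop_s, frame_s):
    """Return one 0/1 label per frame: 1 if the frame overlaps any (start_s, end_s) box.

    Frame f covers the half-open interval [f * hop_s, f * hop_s + frame_s).
    """
    labels = []
    for f in range(n_frames):
        start = f * hop_s
        end = start + frame_s
        # Two intervals overlap when each one starts before the other ends.
        overlaps = any(start < box_end and box_start < end
                       for box_start, box_end in boxes)
        labels.append(1 if overlaps else 0)
    return labels

# Hypothetical annotation: one call marked from 1.0 s to 2.0 s in a 4 s clip.
labels = frame_labels([(1.0, 2.0)], n_frames=8, hop_s=0.5, frame_s=0.5)
print(labels)
```

Requiring only any overlap is the simplest rule; a stricter version might demand that a minimum fraction of the frame lie inside a box, so that frames barely touching a call are not labeled as calls.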